Learning From Disaster

BY CHARLES Q. CHOI

ILLUSTRATION BY I-SHAN CHEN

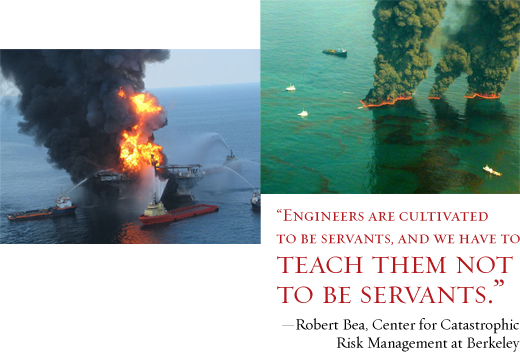

The Gulf oil spill holds powerful lessons for future engineers.

On April 20, 2010, executives from BP, which leased the Deepwater Horizon, and from Transocean, which owned it, flew to the Gulf of Mexico to celebrate the oil rig’s outstanding seven-year safety record. Hours later, celebration gave way to horror when gas surged upward from the well 3 miles below and exploded, killing 11 workers, injuring 17, and starting a gusher that would foul the Gulf with more than 170 million gallons of oil.

The tragically misplaced confidence evident at that celebration is just one of many cautionary lessons in the worst man-made environmental disaster in U.S. history. “We had a giant science experiment out in the Gulf of Mexico, and if we didn’t prevent it, we could at least learn from the miserable experience,” says Robert Bea, cofounder and principal researcher of the University of California at Berkeley’s Center for Catastrophic Risk Management. From “fail-safe” measures that failed to unheeded warnings, inadequate inspections, and unstable cement, the Gulf oil spill provides a series of teachable moments — technical, theoretical, and professional.

Calamities like the spill from the Macondo well “are like the Aesop’s fables for our day,” says Gary Halada, a professor of materials science and engineering at New York’s Stony Brook University who teaches a course called “Learning from Disaster.” He adds, “Engineering disasters are especially teachable because they make strong impressions on students that can get past barriers to learning.”

What Risk Requires

One lasting impression, according to Halada, is the impact that engineering can have on society. In the case of the Gulf oil spill, the impact begins with lives lost and extends to damaged livelihoods and spoiled natural habitats. This lesson is particularly important for students to absorb in connection with engineering projects involving risk and experimentation. Deepwater Horizon held plenty of both. Floating 52 miles off the Louisiana coast in 5,000 feet of water, the rig dug a well 13,000 feet beneath the ocean floor over 74 days. Although the platform had drilled even deeper wells in the past, this kind of drilling poses extreme hazards, comparable to flying to the moon or Mars, says Bea, an offshore drilling expert who once consulted for BP.

Were there fail-safe measures? Yes. If the crew lost control of the well and a dangerous blowout (a wild gush of oil and gas) did come, a 450-ton, five-story stack of shut-off valves known as the blowout preventer, or BOP, was supposed to serve as the last line of defense. It is equipped with several pipe rams, sealing elements that close around a drill pipe to seal off the space around it if necessary.

“The BOP is ultimately a backup measure,” says Tadeusz Patzek, chair of the department of petroleum and geosystems engineering at the University of Texas at Austin. “Oil well drilling plans should be conducted in such a way that the BOP is never used, so that there are multiple barriers used in addition to and separate from the BOP. That reduces the chances of failure considerably.” But if BP’s 2009 response plan is any indication, preparation for a potential Gulf of Mexico oil spill was perfunctory. Filled with omissions and errors, the document cited a wildlife expert who had been dead since 2005 and species that lived nowhere near the area.

Big as it was, the BOP may not have been sturdy enough, Bea suggests: “Countries such as Canada have been terrified of what we’ve been doing with oil rigs in the Gulf of Mexico for more than two decades. Their blowout preventers are more expensive, heavier, and bigger, and they are robust as hell.”

When it was needed, the BOP failed, for reasons still not fully explained. This worst-case scenario should have triggered a powerful “blind shear ram,” designed to slice through the drill pipe completely and seal the well. But none of the three different ways to activate the blind shear ram worked. One, called a “dead-man switch,” turned out to be hooked up to a dead battery. Even had it been activated, the device might not have worked; according to a BP report, it had been leaking hydraulic fluid.

The lesson here? “Engineers worship redundancy, but redundancy by itself can be a killer,” Bea says. Redundant parts are useful only when they are independent of each other. When they’re not, as with the multiple pipe rams and the several ways to activate the blind shear ram, “you’re dead.” The pitfalls of redundancy should be well known. Henry Petroski, professor of civil engineering and history at Duke and a Prism columnist, teaches a lesson from Galileo, the 16th- and 17th-century astronomer and physicist, involving a beam supported at both ends. “People worried about it breaking, so they put an extra support in the middle.” But the new support turned out to be more substantial than the other two. “Eventually the beam settled and rested at the middle, with the ends falling downward,” Petroski explains. As a result, the beam broke. “The change to the system introduced a new mode of failure. When you add a redundancy, you have to reanalyze the system from scratch.”
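Bea’s point about dependent redundancy can be made concrete with a toy calculation. The sketch below is purely illustrative and is not drawn from any Deepwater Horizon investigation: it assumes a simple “beta-factor” common-cause model, a standard simplification in reliability engineering, and made-up numbers (each of three barriers failing 1 time in 100 when called on, with a fraction `beta` of those failures coming from one shared cause, such as a dead battery, that disables every barrier at once). The function names are hypothetical.

```python
# Toy comparison of independent vs. dependent (common-cause) redundancy.
# All probabilities are illustrative assumptions, not Deepwater Horizon data.


def independent_failure(p_each: float, n: int) -> float:
    """Chance that all n barriers fail when each fails independently with p_each."""
    return p_each ** n


def common_cause_failure(p_each: float, n: int, beta: float) -> float:
    """Beta-factor sketch: a fraction `beta` of each barrier's failure probability
    comes from one shared cause that disables all n barriers at once."""
    p_shared = beta * p_each          # shared cause knocks out every barrier together
    p_own = (1.0 - beta) * p_each     # each barrier's remaining, independent failure chance
    # All barriers fail if the shared cause strikes, or if it does not
    # and each barrier then fails on its own.
    return p_shared + (1.0 - p_shared) * p_own ** n


if __name__ == "__main__":
    p, n = 0.01, 3
    print(f"independent: {independent_failure(p, n):.2e}")
    print(f"beta = 0.1:  {common_cause_failure(p, n, beta=0.1):.2e}")
    print(f"beta = 1.0:  {common_cause_failure(p, n, beta=1.0):.2e}")
```

Under these assumed numbers, three truly independent barriers would all fail about once in a million demands. Letting just 10 percent of failures share a cause raises that to about once in a thousand, and when every failure shares a cause (`beta = 1.0`), three barriers are no better than one.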

Investigations have revealed technical shortcomings and apparent failures to heed warnings. For instance, Deepwater Horizon’s blowout preventer had only one blind shear ram; a third of the rigs in the Gulf now have two. In addition, one of the rig’s secondary pipe rams had been replaced with a test ram. This common industry practice reduces operating and other costs, but it meant the ram would have been useless in an actual blowout. BP also chose not to use a steel liner tube in the final section of the well, a design that might have kept the explosive gas from surging up the pipe. It would have cost an extra $7 million to $10 million.

Halliburton, the company responsible for cementing the well, recommended using 21 “centralizers” to position the pipe that ran down the center of the well. Centralizers reduce risk by keeping the pipe centered so that the cement forms an even sheath with no gaps where gas can squeeze through. BP used just six. Halliburton’s own performance has also been called into question: tests performed before the deadly blowout showed that the cement used in the well was unstable.

When the hydraulic leak in the blind shear ram was discovered, operations should have been suspended, as regulations required. They weren’t. In another apparent lapse, an equipment assessment revealed that many key components had not been fully inspected since 2000, even though guidelines require such inspections every three to five years.

Slippery Slope

Perhaps the most important lesson from Deepwater Horizon is that people can at times be the weakest links in the system. “I often tell my classes that they spend a great deal of time learning about physics and mechanics and chemistry, and that turns out to be about 10 percent of the problem of engineering successful systems,” Bea says. “The rest turns on human things.”

Conscientious engineers are taught the importance of completing checklists, which are often long and complex. “It might seem boring, but when people don’t, that’s when problems start to happen,” Halada says.

Ignored warnings and missed opportunities to correct problems start a “long slide down the slippery slope of incremental negative decisions,” Bea adds. This has come to be known as “normalization of deviance,” a term coined by sociologist Diane Vaughan, then at Boston College, to explain the human failings that contributed to the loss of NASA’s space shuttle Challenger. Patzek explains, “You have this increasing sense of false safety, that we’ve been doing this so long and gotten away with it so many times that what we do is therefore inherently safe.”

Urgency can aggravate human frailties. Although investigators are divided on whether BP, Transocean, and Halliburton cut corners to save money, there were strong pressures to speed up the operation. At the time of the explosion, drilling was about six weeks behind schedule and 61 percent, or nearly $60 million, over budget.

“Every industrial and research endeavor has to have a single goal, and you cannot have multiple competing goals,” Patzek says. “If you feel safety is No. 1, then you cannot say that safety is No. 1 and that the job should be done at the lowest cost and that the turnaround time should be the shortest possible.”

Finally, Deepwater Horizon underscores the need for engineers to be trained to speak out when they see something wrong. “Engineers are cultivated to be servants, and we have to teach them not to be servants,” Bea says. “We tend to see ourselves as simply providing information for other people to use in reaching decisions.” A confidential survey of workers aboard the doomed rig, commissioned by Transocean weeks before the explosion, found that many workers often saw unsafe behavior and malfunctioning equipment aboard the rig but feared retaliation if they reported problems. The risks to a whistle-blower can be daunting. That, says Petroski, highlights the need to give future engineers communication and debating skills. “That way you can make the case about why to not cut corners. A student who is articulate and well-versed in historical case studies can, by analogy, argue about the risk of failure. . . . It’s about learning how to be persuasive.”

Charles Q. Choi is a freelance writer based in New York.
