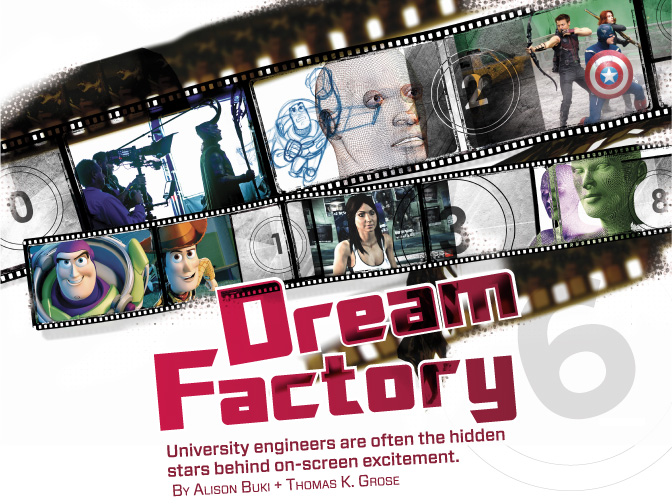

Dream Factory

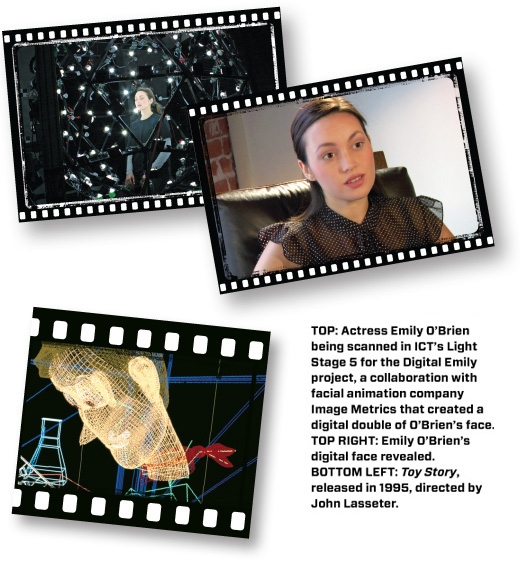

The Curious Case of Benjamin Button charmed moviegoers with the story of a man whose life unfolds in reverse, from wizened oldster to cherubic baby. The 2008 film, starring Brad Pitt, raked in $333.9 million and won three Academy Awards, including one for visual effects. And these effects were indeed amazing. For nearly a third of Button’s 166-minute running time – 325 shots – the title character wasn’t Pitt at all but instead a virtual Benjamin, who seamlessly interacted with humans on screen. This was achieved largely by an image-capture and simulation system called Light Stage, which digitally processes the full dynamic range of light in a scene and uses the data to relight virtual characters with an accuracy that makes them appear more realistic and alive.

Light Stage inventor Paul Debevec, who shared a separate Scientific and Engineering Oscar with three colleagues in 2010, has spent 13 years working with movie studios on the special effects that turn Hollywood movies into blockbusters. But that’s not his day job. He is a research professor at the University of Southern California and heads the Graphics Laboratory at the school’s Institute for Creative Technologies.

Debevec is hardly unique. With a never-ending appetite for discoveries and inventions emerging from cutting-edge research, filmmakers and game publishers have formed symbiotic relationships with many engineering and computer science academics at top research schools, including USC, Carnegie Mellon, Georgia Tech, and MIT. “They are tasked with showing people things that nobody’s ever seen before, every single summer. And that’s a tall order to fill,” Debevec explains. “That’s why they’ve become very important early adopters of new technology.” There’s a payoff for researchers, too. Consider the windfall bestowed on Carnegie Mellon’s computer science faculty and students four years ago with the opening on their campus of Disney Research, Pittsburgh, a lab specializing in radio, antennas, and sports visualization.

University-based special effects engineers help keep innovation humming in an industry where the United States still leads the world. U.S. films generated about $80 billion in revenue in 2010, according to federal figures, and the U.S. industry enjoys as much as a 90 percent market share in some countries abroad, producing a trade surplus of nearly $12 billion in 2009. The Motion Picture Association of America claims the film industry supported 2.2 million U.S. jobs and paid nearly $137 billion in wages in 2009. The video game industry is also huge. Research consultants at Gartner estimate that the combined value globally of the video game industry’s software, hardware, and online sales will jump from $74 billion in 2011 to $112 billion in 2015.

From King Kong to The Avengers

From the early days of film, technology has always been central to the industry’s success. Thomas Edison devised the classic motion picture camera and sprocketed film in the late 19th century, an invention that’s only now giving way to digital cameras. Movies flourished in the silent era, but went into overdrive when The Jazz Singer, starring Al Jolson, launched the “talkies” in 1927. By the time Greta Garbo uttered her first on-screen line in the 1930 film Anna Christie – “Gif me a vhisky, ginger ale on the side, and don’t be stingy, baby!” – a majority of U.S. and British theaters were equipped for sound.

The 1933 King Kong, one of the earliest special effects-driven megahits, used stop-motion photography to make the giant ape appear alive. This technique, which requires shooting models that are moved ever so slightly for each shot, reached its apogee in the 1960s and ’70s, when famed animator Ray Harryhausen made such classics as Jason and the Argonauts and The Golden Voyage of Sinbad. Movies in 3-D debuted in the 1950s as a way for the industry to compete more successfully against television. They quickly fell out of favor, but new digital technologies have boosted 3-D’s popularity anew.

George Lucas’s 1977 Star Wars kicked off the current era of special-effects superhits. All of the biggest box office winners since then – Jurassic Park, Terminator, Toy Story, Titanic, Lord of the Rings, Harry Potter, Avatar and this year’s The Avengers – have owed much of their success to the magic created by special-effects engineers. It was, in fact, the 1993 release of Steven Spielberg’s technologically groundbreaking dino-romp Jurassic Park that stoked Debevec’s fascination with visual effects. Then a graduate student at the University of California, Berkeley after earning a B.S. in electrical and computer engineering from the University of Michigan in 1992, he became interested in exploring the problem of how to integrate, realistically, computer-generated objects and people into pre-existing environments. “I just thought it would be cool to try to do it scientifically right,” Debevec says, noting that Spielberg’s designers “were doing it basically all by eye and by sheer force of artistry.” In 1997, while still a grad student, Debevec invented a system of image-based modeling that could create realistic virtual replications of buildings. Those techniques were featured two years later in the artificial-intelligence thriller The Matrix.

The award-winning Light Stage system, used in Avatar and Spider-Man 3 as well as Button, focuses on capturing and simulating humans in real-world illumination. It has continued to evolve over the past decade, since Debevec built the first version on a wooden stage with a single spotlight. Light Stage X is the most current and technologically advanced of Debevec’s systems. A geodesic half-dome structure, the stage stretches 9 feet in diameter and boasts 346 LED light units placed evenly throughout, each with its own microprocessor that connects to a larger main computer.
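The principle behind relighting can be sketched in a few lines of code. Because light adds linearly, a face photographed once under each light on its own can, in principle, be "relit" for a new scene by summing those photographs, each scaled by how bright that light is in the target environment. The sketch below is a toy illustration of that idea only, not the Light Stage pipeline itself: the function name `relight`, the flat-list image format, and all pixel values are assumptions made for the example.

```python
# Hypothetical sketch of image-based relighting (not the actual Light Stage
# software). Light is additive, so photos taken under one light at a time
# can be combined into an image of the subject under any mix of those lights.

def relight(basis_images, light_weights):
    """Return the weighted sum of per-light images.

    basis_images:  one image per light, each a flat list of pixel
                   intensities captured with only that light switched on.
    light_weights: brightness of each light in the target environment.
    """
    if len(basis_images) != len(light_weights):
        raise ValueError("need exactly one weight per basis image")
    num_pixels = len(basis_images[0])
    result = [0.0] * num_pixels
    for image, weight in zip(basis_images, light_weights):
        for i in range(num_pixels):
            result[i] += weight * image[i]
    return result


# Toy data: three lights and a four-pixel "image" (values are made up).
basis = [
    [1.0, 0.0, 0.5, 0.0],  # only light 0 on
    [0.0, 1.0, 0.5, 0.0],  # only light 1 on
    [0.0, 0.0, 0.5, 1.0],  # only light 2 on
]
# Target scene: light 0 is bright, light 1 is dim, light 2 is off.
relit = relight(basis, [2.0, 0.5, 0.0])
```

A real stage would work with hundreds of lights and full-color, high-dynamic-range photographs rather than four numbers, but the weighted sum is the same.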

Mobile Apps and the Wii Console

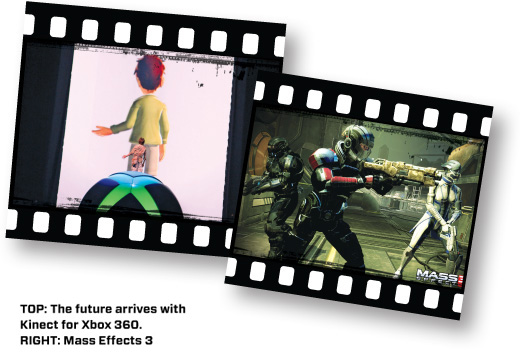

Movie companies tend to be first-users of visual technologies, which later migrate into video games. But Debevec expects that within a few years games will be offering the same kind of innovations almost simultaneously with film. Since its start in the early 1970s with arcade games like Pong, followed by Pac-Man in 1980, the industry has soared as games went from tape cassettes to CD-ROMs to DVDs to consoles. The biggest growth area today is wireless broadband and mobile app-based games. Also popular are motion-sensing games, first introduced in 2006 by Nintendo with its Wii console. Competitors at Sony and Microsoft have since rushed to create similar products. In 2010, Microsoft debuted Kinect for Xbox 360, a hands-free, motion-sensing peripheral that allows users to become the game controller through gestural input and voice commands.

With 8 million units sold in the first 60 days, Kinect set a Guinness world record for the fastest-selling consumer electronics device. Engineers and programmers were quick to see that its 3-D depth sensor, multiarray microphone, and state-of-the-art video camera could be used for myriad other products. Soon, hackers were rigging Kinect to a quadrotor for autonomous navigation, and programming it to remotely control humanoid robots. Microsoft initially was inclined to fight the hackers but now is rolling out a special version for them and has become a partner in a Kinect Accelerator to fund Kinect-based ventures.

Besides exploiting game technologies for other purposes, engineers are working on better games, ones that help players become action heroes, athletes, and pop stars. At Carnegie Mellon’s Entertainment Technology Center, four graduate students are looking to take motion-sensitive game play to the next level by adapting it to the more complex world of fighting games, also known as “hack and slash.” Patrick Jalbert, Adam Lederer, Pei Hong Tan, and Chenyang Xia recently completed a group project called Action in Motion, which features a short action-oriented game where players control the movements of a fighting 3-D animated robot through gestures.

Since few Kinect players can perform the flashy martial arts moves frequently seen in fighting games, a main challenge for the Action in Motion team has been fostering a sense of natural continuity between the users and their avatars. Through a combination of sophisticated animation blending techniques and many hours of player testing, the group has created a system of gestural controls that lets users intuitively guide their robot avatar through a variety of combat situations while keeping the action looking dynamic and fun. To accomplish this, “you need to mix in some animation and poses,” says Jalbert, the team’s animator. The player “performs the action, and then the character will do it in a more theatrical way.” A demonstration of the game proved very popular at an ETC project fair last May. “We had some younger players that we couldn’t tear away from it,” Lederer says. The Carnegie Mellon team hopes to sell new, more action-oriented Kinect adventures: “This game is a proof of concept that something like this can work. We are hoping to inspire people . . . to create different kinds of games on the Kinect,” Lederer says.
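For readers curious what "mixing in" animation might look like in code, here is a minimal sketch of one common approach, linear pose blending. It is not the Action in Motion team's system, whose details the students did not describe; the function `blend_pose`, the joint names, and the angle values are all hypothetical, chosen only to show how a player's tracked pose can be nudged toward a more theatrical, pre-authored one.

```python
# Hypothetical sketch of pose blending (not the Action in Motion code).
# A pose is a dictionary mapping a joint name to an angle in degrees.

def blend_pose(player_pose, authored_pose, weight):
    """Linearly blend two poses joint by joint.

    weight = 0.0 returns the player's tracked pose unchanged;
    weight = 1.0 returns the animator's authored pose.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    return {
        joint: (1.0 - weight) * player_pose[joint] + weight * authored_pose[joint]
        for joint in player_pose
    }


# A modest real-world punch versus a flashy animated one (made-up angles).
player = {"shoulder": 40.0, "elbow": 10.0}
theatrical = {"shoulder": 100.0, "elbow": 90.0}

# Halfway blend: the avatar follows the player but exaggerates the move.
blended = blend_pose(player, theatrical, 0.5)
```

Blending raw angles like this ignores wraparound at 360 degrees, so a production system would more likely interpolate rotations in another representation, such as quaternions. The sketch is meant only to convey the basic trade-off between fidelity to the player and showmanship.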

Another technology that’s still in the lab but holds huge potential for the film, television, gaming, and music industries is holography, the transmitting of life-size – or larger – holograms, even in real time. This technology is likely to get its start in gaming – with holographic avatars that initially are much smaller than life-size – before jumping to TV or movie screens, says V. Michael Bove Jr., director of the Object-Media group at the MIT Media Lab. “Gaming looks like a clear case, because it’s easier to do on a desktop or tablet,” Bove says. “For the big screen, it’s harder to scale up.” But what about the life-size “hologram” performance of the late rapper Tupac Shakur that thrilled the crowd last April at the Coachella music festival? It turns out that the technology used to resurrect Tupac – who died of gunshot wounds in 1996 – wasn’t what it claimed to be. “That wasn’t really a hologram,” Bove explains. “From an engineering perspective, the optics behind it were 150 years old.” It was instead an optical illusion created by projecting an image onto a half-silvered, angled mirror. The result is an image that looks as if it’s onstage. The Tupac stunt did, however, prove that demand for real holographic entertainment could be strong. An MIT holography spinoff, Zebra Imaging, is working to make that happen.

Bove’s team, meanwhile, created a buzz at an early 2011 holography conference in San Francisco when it used a Kinect camera to transmit a holovideo in real time that moved at 15 frames per second. Since then, his team has increased the rate to the 24 frames per second required for feature films and the 30 frames per second needed for television transmission. Bove argues that true holovideos will be much more three-dimensional than current 3-D technologies, and users won’t need to wear silly glasses to see them. The 3-D techniques used in films do not have motion parallax, which makes focusing hard. “That’s not so bad on the big screen,” Bove says, “but in the living room, on a desktop or a table, you want to refocus to different distances, so it’s uncomfortable. With holograms, you don’t have that problem.” Also, with current 3-D technology, audience members see the same image no matter where they’re sitting. With a hologram, viewers see different sides of the image depending on where they’re sitting – screen-left, right, or center – which is more realistic. In the future, holography will have a role to play in the visualizing of the massive amounts of data collected by supercomputers, Bove says. “Holograms are perfect for that. That is certainly going to happen.”

History of the Universe

Technologies that make superheroes and space aliens come alive in movies and computer games have applications far beyond pure entertainment. Stanford University’s Kavli Institute for Particle Astrophysics and Cosmology (KIPAC) is engaged in what might be the ultimate reverse-engineering project: reconstructing and visualizing the universe’s early days. The project draws on discoveries of phenomena like cosmic background radiation (traceable remnants of the big bang), quasars (brilliant galactic cores powered by black holes), and pulsars (rotating neutron stars) that have deepened our understanding of the history of the universe. Tom Abel, an associate professor of physics and head of KIPAC’s computational physics department, leads a team of more than 200 faculty, students, and postdocs that uses supercomputers to re-create events in the universe, or “predict the past,” as he puts it.

In KIPAC’s dazzling renderings of the cosmos in flux, multicolored clouds of space dust swirl entrancingly as stars form and galaxies collide. Each animation lasts two or three minutes, but the periods each one depicts could range from the few milliseconds of a supernova to billions of years of cosmic history. Beyond their aesthetic appeal, these visuals offer a novel way to conceptualize events typically beyond human imagination. Accordingly, KIPAC animations have been used in planetarium shows at the American Museum of Natural History in New York City and at the California Academy of Sciences in San Francisco. Viewers experience the shows in 3-D at high-definition resolution, with dual projectors casting the animations onto a massive panoramic screen.

KIPAC tackles numerous engineering challenges, most significantly in developing software capable of crunching trillions of bytes of data. “Everything from running the models to the final visualizations are all custom-written,” Abel says. The man responsible for transforming data sets into stunning animations is Ralf Kaehler, head of the Visualization Lab at KIPAC, an applied mathematician by training. Kaehler has developed algorithms that convert data created by a supercomputer (which calculates variables like size, brightness, temperature, and distribution of matter in space) into colorful 3-D images using iterative techniques and state-of-the-art graphics cards. Cinematic elements, such as camera movement, zooming, and pacing, are also used to add visual flair and to enhance viewers’ comprehension. Abel sees potential for modeling and visualizing the huge amounts of data collected in areas ranging from natural phenomena, such as long-term weather patterns, to nuclear fusion reactions.
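One basic building block of scientific visualization is a "transfer function," a rule that turns a simulated quantity into a color. The sketch below shows a deliberately simple one that maps temperature onto a blue-to-red scale. It is an illustration of the general idea only and makes no claim about Kaehler's custom algorithms; the function name `temperature_to_rgb`, the color scheme, and the temperature range are assumptions.

```python
# Hypothetical sketch of a transfer function (not KIPAC's software):
# map a simulated temperature to a color, with cold gas shown as blue
# and hot gas shown as red.

def temperature_to_rgb(temp, t_min, t_max):
    """Return an (r, g, b) tuple with each channel in the range 0..255."""
    if t_max <= t_min:
        raise ValueError("t_max must be greater than t_min")
    # Normalize to 0..1, clamping values that fall outside the range.
    t = (temp - t_min) / (t_max - t_min)
    t = max(0.0, min(1.0, t))
    red = int(round(255 * t))
    blue = int(round(255 * (1.0 - t)))
    return (red, 0, blue)


# Made-up range in kelvins for a cloud of interstellar gas.
coldest = temperature_to_rgb(10.0, 10.0, 10000.0)     # pure blue
hottest = temperature_to_rgb(10000.0, 10.0, 10000.0)  # pure red
```

Applying a rule like this to every cell of a simulation grid, then compositing the colored cells along each line of sight, is the rough outline of volume rendering, the kind of work that graphics cards are built to do quickly.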

Video game special effects increasingly are found in instructional materials. Earlier this year, the Pentagon and FBI acquired licenses from Epic Games for the same engine used for Mass Effect 3 and Infinity Blade. The Pentagon will use it for, among other things, training intelligence analysts and medics.

Many Players

A common thread in all special effects engineering is its multidisciplinary nature. Bove’s holography team at MIT includes chipmakers, experts in digital signal processing, optics specialists, and, he notes, “mechanical engineers, because eventually it all has to be packaged into a box.” To foster collaboration across disciplines, USC’s Institute for Creative Technologies was deliberately established as an independent center, not part of a college or department. At Georgia Tech, Blair MacIntyre, an associate professor of computer science, is an expert in augmented reality (AR), where users enhance their real environment with digital information – including 3-D graphics and pictures – in real time, often via the viewer of a smartphone camera. “It’s still relatively niche-y,” MacIntyre says. But eventually, he believes, the technology will move beyond smartphones to a hands-free headset, providing games that immerse players in a sci-fi novel. To do that, he says, requires a host of experts, including computer vision and graphics specialists. “Games basically touch all of the computer sciences disciplines.” And others, too: One of MacIntyre’s projects is the Augmented Reality Game Studio, where students and faculty from Georgia Tech work with colleagues from the Savannah College of Art and Design and the Berklee College of Music to develop mobile AR games.

As in so much of information technology, advances in special effects may bring down the entertainment industry’s blockbuster budgets. James Cameron’s Avatar cost an eye-popping $237 million. It now takes $10 million to develop a major-title computer game for a single console platform. But Debevec predicts that within a decade, the cost of high production-value technologies will be within reach of small-budget auteurs: “I think the technology will really be mature when an independent filmmaker can use every single tool that was at the disposal of James Cameron when he made Avatar.”

Special-effects technologies have revolutionized film and turned electronic games into a major global industry. Soon they will penetrate the field of advanced computing. The next generation of so-called exascale supercomputers – 1,000 times faster than today’s – won’t use central processing units (CPUs), which would melt from the heat generated. Instead, computer engineers are turning to the graphics processing units (GPUs) used in games. GPUs are able to run many computing tasks simultaneously, and a single GPU uses one-eighth the energy per calculation of a CPU. Supercomputers running on Sony PlayStation 3 chips? Now that is a special effect.

Alison Buki was, until recently, an ASEE staff writer. Thomas K. Grose is Prism’s chief correspondent.
