Delivering Big Data

+ By Thomas K. Grose

When Superstorm Sandy slammed ashore 5 miles south of Atlantic City, N.J., the night of October 29, where and when it hit came as no surprise. Thanks to supercomputers able to sift through ever higher mountains of data – collected from planes that fly into storms, satellites, ground stations, and weather balloons – and advances in modeling technology, forecasters had pretty much pinpointed 48 hours earlier where it would make landfall. Evan a four-day tracking forecast “is as accurate as was the two-day forecast just 15 years ago,” says Ed Rappaport, deputy director of the National Hurricane Center. “In other words, communities have gained two days of preparation time.”

Forecasters’ success in tracking Sandy, one of the worst storms ever to hit the East Coast, offers a high-profile example of the ways scientists and researchers harness and extract wisdom from a growing deluge of digital information, known by the buzzwords Big Data. Measured in petabytes, terabytes, and zettabytes, Big Data is no passing fad. Researchers predict that a capability to use artificial intelligence tools to cope with, analyze, and combine hoards of disparate data sets, structured and unstructured, will unleash countless breakthroughs in science, medicine, commerce, and national security, ultimately helping us to live healthier, safer, and more enjoyable lives. “There is simultaneous interest in Big Data from academia, government, and industry, and that bodes well,” says Naren Ramakrishnan, a professor of engineering in Virginia Tech’s computer science department. A report last year by the World Economic Forum called Big Data a new class of economic asset. In healthcare alone, says the consulting firm McKinsey & Co., effective use of Big Data could create more than $300 billion in value in a year, with two thirds of that coming from annual reductions in costs of around 8 percent.

Recognizing Big Data’s potential, the Obama administration announced last March that it would spend $200 million on a research-and-development initiative – via such agencies as the National Science Foundation, the National Institutes of Health, and the Departments of Defense and Energy — to improve ways to access, store, visualize, and analyze massive, complicated data sets. For example, the Energy Department is spending $25 million to launch a new Scalable Data Management, Analysis and Visualization Institute at its Lawrence Berkeley National Laboratory. And beyond the White House initiative, the Pentagon is spending in additional $250 million on Big Data research.

The term Big Data is actually an understatement. The amount of global data should hit 2.7 zettabytes this year, then jump to 7.9 zettabytes by 2015. That’s roughly equivalent to more than 700,000 Libraries of Congress, each with a print collection stored on 823 miles of shelves. A zettabyte is two denominations up from a petabyte. Big Data is also fairly new: IBM estimates that fully 90 percent of the data in the world today didn’t exist before 2010. Where does it all come from? Well, a short list would include news feeds, tweets, Facebook posts, search engine terms, documents and records, blogs, images, medical instruments, RFID signals, and billions of networked sensors constituting the Internet of Things. “The amount of data is going sky high,” says Mark Whitehorn, a professor of computing at Scotland’s University of Dundee. “There’s a reason why it was not collected back in the day.”

A visualization created by IBM of Wikipedia edits. At multiple terabytes in size, the text and images of Wikipedia are a classic example of big data. WIKIPEDIA: VIEGAS

Early Indicators

Cloud computing – networks of thousands of warehouse-size data centers – means we can now collect and store vast amounts of data at relatively low cost. And the processing power of today’s supercomputers means it can be searched and crunched – again, at little expense. That capability is powering EMBERS (for early model-based event recognition using surrogates), a multiuniversity, interdisciplinary team headed by VT’s Ramakrishnan that uses “surrogates” to predict societal events before they happen. What are surrogates in this context? Well, if you fly over a country at night, luminosity can give you a pretty good gauge of its economic output. The points of light below can, for instance, indirectly indicate how much big industry it has and its locations. That makes luminosity a surrogate. And when you have enough surrogate information, it can also act as an early indicator of things to come. That’s certainly true of the mounds of public information now available to researchers in massive data sets. Everything from news feeds to tweets to search-engine commands are chock-full of myriad surrogates that, properly mined and analyzed, can forecast a range of events, from epidemics to violent protests to financial market swings.

These days, Ramakrishnan is engulfed in a sea of surrogates. In May, EMBERS won a three-year contract potentially worth $13.36 million from the Intelligence Advanced Research Projects Activity, the research arm of the Office of the Director of National Intelligence. The group was tasked with creating algorithms that can issue early warnings of potentially destabilizing events in Latin America, such as strikes, spread of infectious disease, or ethnic empowerment movements. Such events might be predicted based on changes in communication, consumption, and movement in a population. “It’s a big challenge,” Ramakrishnan says of tracking and analyzing rapidly changing data. “How do you do it automatically, and on the fly?” When an earthquake hit Virginia last year, Ramakrishnan notes, tweets from the epicenter were read by New Yorkers before the quake’s shock waves rattled the city mere seconds later.

To make worthwhile use of this information, researchers need to design new algorithms that can burrow through petabyte-level data sets and find insights. “The statistical tools we have were developed for a much different, smaller scale,” says Jan Hesthaven, a professor of applied mathematics at Brown University. The algorithms must also enable users to keep pace as the amount of data expands, explains Michael Franklin, a professor of computer science at the University of California, Berkeley. “As data grow to the petabyte level, it slows algorithms,” says Franklin, whose Algorithms, Machines, and People Lab (AMPLab) was awarded $10 million from the Obama initiative. Moreover, as these systems add more and more computers, the likelihood of partial system failures grows. So algorithms also need to be fault tolerant. In many cases – as with EMBERS – it’s also necessary for algorithms to work in real time as data keep streaming in, and from data sets that are very different from one another and not always that clean. “The ultimate goal is to perform analyses on terabyte and petabyte amounts of information that are constantly changing,” says Adam Barker, a computer science lecturer at the University of St. Andrews.

Of course, algorithms developed for one set of problems can also be applied to other sorts of data. Algorithms created to track, say, proteins, might also be useful in scoping out market anomalies. It all comes down to looking for patterns. “The basic math does not change,” Ramakrishnan says. That said, algorithms will have to be tweaked to answer the questions being asked. If data mining is looking for the proverbial needle in the haystack, then “some people care about the needle, others care about the hay,” Hesthaven says. Data scientists often must work with specialists in other disciplines. The EMBERS group collaborates with experts on Latin America who would know, for instance, if a flood of search-engine queries for a certain locally popular herbal remedy signals an epidemic. With calibration from experts on other regions, the models EMBERS develops could be used to detect emerging problems elsewhere in the world.

If supercomputers can say where and when a hurricane hits the coast, they can also predict wind patterns and enable power companies to overcome the problems caused by intermittent sources of energy, such as turbines. Researchers at the Argonne National Laboratory have put their supercomputers to use to make wind power forecasts more accurate and begun developing tools to predict the impact of wind power on systemwide electric grid emissions. Argonne’s supercomputers have also been used by Boeing engineers as virtual wind tunnels to simulate the effects of turbulence on aircraft landing gear.

Detecting Anomalies

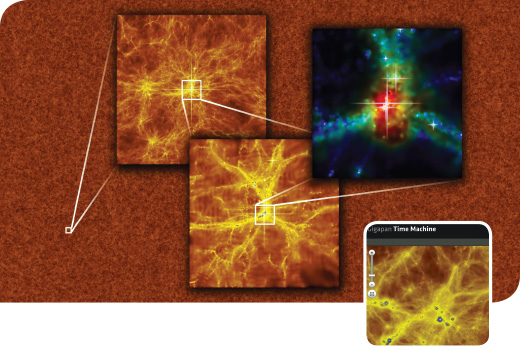

The flip side of simulations is visualizations, presenting data graphically so they’re more easily understood by scientists. “We humans are very good at detecting anomalies and changes if data are presented to take advantage of that characteristic,” says Robert Calderbank, dean of natural sciences at Duke University. David Ebert, a professor of electrical and computer engineering at Purdue University, agrees: “The fact is that humans have evolved an ability to process information quickly, visually. People trust their eyes.” Carnegie Mellon University robotics researchers have created a browser called Gigapan Time Machine, which visualizes simulations built from archived data. It allows users not only to pan and zoom in and out of high-resolution videos but also to move back and forth in time. For example, you could focus on a building’s details, pull back for a panoramic view, or jet back into time to watch the building being built. This feature allows, say, astrophysicists to watch a simulation of the forming of the early universe, and to also zoom in to look at a specific region in detail. (For more on visualizations, see this month’s Up Close).

Using massive data sets, Carnegie Mellon’s Gigapan Time Machine lets viewers interactively see an image change over time. Here, it captures the early development of the Cosmos. IMAGES COURTESY OF CARNEGIE MELLON UNIVERSITY

Beyond interpreting information and providing it in a form that’s easy to understand, effective use of Big Data requires being able to find what’s relevant in a data set. That’s where the Semantic Web comes in. It’s an effort to post data online in such a way that software crawling through them can understand the meaning behind the data. Jim Hendler, computer science department head at Rensselaer Polytechnic Institute and a pioneer of the Semantic Web, says that in nearly 30 years of research almost every project he was involved with began with the question: Where do we get the data? Now it’s: How do we find exactly what we need? “We went from how to find information to how to filter it,” Hendler says, and the Semantic Web works as a filter.

Your DNA on a Card

In the foreseeable future, Big Data will probe more deeply into the mysteries of the universe. Franklin’s AMPLab, for instance, aims for algorithms that help find Earth-like planets. We’ll also get increasingly reliable climate-change models, faster medical research, and more precise delivery of healthcare. But perhaps the greatest breakthroughs will emerge from work that builds on the Human Genome Project. The ability to sequence a cancer patient’s specific genome to come up with tailored therapies is within reach. “That’s a big one,” Franklin says. “Next to that, everything else pales.” Indeed, it’s likely we’ll one day carry credit cards with our DNA sequenced on them to help doctors predict, and perhaps diminish, our risk of contracting various diseases.

A team at Dundee, meanwhile, is at work mapping the human proteome dynamics, a project more complex than mapping the genome because there is no single proteome, and the properties of thousands of cell proteins constantly change. Currently, many potentially useful drugs don’t reach the market because they could harm just a tiny fraction of the population. But since drugs work on cell proteins, knowledge of a patient’s proteome would let a physician find out, with a quick test, which drugs can be administered safely. In two to three years, the Dundee project is expected to yield one to five petabytes of data. “The computers of 10 years ago could not do this,” Whitehorn says. In as little as five years’ time, he adds, “we can improve the lives of a lot of people by producing better drugs.”

From the hospital bed to the street, Big Data holds the potential for saving lives, easing traffic congestion, fighting crime, helping first responders in emergencies, and gathering intelligence. The U.S. Department of Transportation is funding a $25 million Michigan study of 3,000 smart cars, buses, and trucks that have built-in data recorders and communicate with one another wirelessly to help avoid collisions and also warn drivers of hazardous conditions. Surveillance cameras could become more effective once they “have software behind them to try to predict crimes” based on silently recorded patterns of activity, Hesthaven says. Mind’s Eye, a project of the Defense Advanced Research Projects Agency, is working on smart surveillance cameras that would collect images and forward only those relevant to intelligence collectors’ needs.

At Purdue, Ebert’s Visual Analytics for Command, Control, and Interoperability Environments (VACCINE) lab, funded by the U.S. Department of Homeland Security, is developing technologies for turning massive amounts of data into useful intelligence for domestic security personnel, including first responders. One project is working on gleaning information from photos, videos, sensor data, and 3-D models and crunching it into useful visual analytics that can be sent to mobile devices. This can include locations, time, temperatures, and possible toxins. “We ask, ‘What is the most salient information [responders] need?’” Ebert explains. Another VACCINE project is developing an automated algorithm that can detect possible plots or threats from “vast collections of documents.” While algorithms are great at scanning and finding patterns in mountains of data, “we need to keep humans in the loop” to ensure accuracy, Ebert says. For instance, while monitors may detect that hundreds of burglar alarms are going off in one area, a machine won’t draw any inference from that. But a human might quickly deduce there’s been an earthquake.

For supercomputers really to deliver the right information on demand, they need to communicate in natural language. At least, that’s the theory behind IBM research involving Watson, the machine famed for winning the game show Jeopardy!, which can digest and analyze 200 million pages of data in three seconds. Currently assisting medical researchers at Los Angeles’s Cedars Sinai Cancer Institute, soon to be joined by the Cleveland Clinic Lerner College of Medicine at Case Western Reserve University, Watson could ultimately not only help physicians make diagnoses but also be able to take questions from doctors in natural language. And if it can grasp words, why not read? At Carnegie Mellon University, the Never-Ending Language Learner, or NELL, is teaching itself to read and comprehend information from millions of Web pages. So far, it has accumulated more than 15 million “beliefs,” and is “highly confident” that 1.8 million of them are accurate. For instance, it’s 93.8 percent certain that “dairy cow is a mammal.” OK, so it’s in the early days.

Not surprisingly, Big Data has re-energized computer science schools and departments that saw flat or declining enrollment not many years ago. Academics say classes are now mostly full and new courses and degree programs in data science are being added. Berkeley’s Franklin says his AMPLab has 18 corporate sponsors, in part because they’re keen to recruit his graduates. McKinsey & Co. forecasts that within six years, the United States could face a shortage of up to 190,000 people “with deep analytical skills.” Says Barker: “There are so many jobs in this area right now that Big Data engineers and data scientists can pretty much name their own salaries. There is not enough talent to fill the jobs.” For students, that means Big Data is a big deal.

Thomas K. Grose is Prism’s chief correspondent, based in London.

Category: Cover Story